Last modified February 20, 2025

Cloud Backup

This site has been automatically translated with Google Translate from the original page written in French, so there may be some translation errors.

Presentation

This page presents two complementary solutions:

- a data backup and sharing solution based on kDrive, a paid cloud service from Infomaniak, with automatic synchronization and manual backups. This remote backup complements the local backup system and makes it even more robust against a disaster that would destroy both the server and the local backups. This page also details how to set up kDrive synchronization with rclone.

- an additional backup solution storing encrypted data with rclone and Google Drive. Google Drive is free up to 15GB, which is generally more than enough to store sensitive data (excluding multimedia files). One may worry about how Google protects and uses the data, but the problem does not arise here, because the data is encrypted before being sent and is stored encrypted on the drive. It is therefore pure backup rather than data sharing.

Looking for cloud storage solutions

The criteria for choosing a cloud storage solution vary; for me they are as follows:

- access for several people, in this case the close family circle: in addition to the classic backup, this makes it easy to share data without setting up a tedious VPN solution;

- a capacity of at least 2TB, mainly to store various documents and photos for my own needs and those of the family circle;

- a synchronization tool that works under Linux and another under Android;

- certain guarantees of security, confidentiality and non-reuse of my data.

I also considered paid services: any service deserves payment, and if a service is free, there is a catch somewhere. Nothing is free in this world, and in this case it is personal data that finances the service.

You won't find here a long list of all the existing solutions; many sites compare them with more or less objectivity, when they are not barely disguised advertorials. I will however mention a page of the Ubuntu forum and the Wikipedia page on the subject, even if they are not completely up to date.

To keep this research phase short, and at the risk of this page also passing for an advertorial, my choice fell on kDrive from the Swiss company Infomaniak.

Installing and configuring rclone

The two backup solutions mentioned above, with kDrive and Google Drive, are based on rclone, whose official site is https://rclone.org/. You can install your distribution's package, or get the latest version from https://github.com/rclone/rclone/releases and build it. Extract the archive:

tar xvfz rclone-1.69.1.tar.gz

This gives the rclone-1.69.1 directory, in which we type (after installing the golang package):

cd rclone-1.69.1
go build

then, as root:

cp rclone /usr/local/bin

Infomaniak's kDrive solution

kDrive Presentation

Here is a brief description of the kDrive offer:

- there is a Linux client and an Android client;

- their 3TB offer for 6 users is one of the cheapest on the market;

- the data is stored on three different servers in two data centers located in Switzerland, managed by an independent company that is not listed on the stock exchange, and the tools used are based on free software;

- Swiss privacy legislation is of a good level: the new Data Protection Act (LPD), in force since September 1, 2023, significantly improved the previous Swiss legislation by drawing heavily on the European GDPR, without being as demanding;

- Infomaniak does not analyze the content stored in the cloud to extract commercially exploitable information and resell it to third parties.

In practice, most of the administration is done from a browser, whatever the OS. It is from the browser that we create the tree of shared and non-shared folders (the shared files are in the Common documents directory), and it is also from the browser that we upload the files.

For synchronization, you can use the dedicated application provided by Infomaniak or rely on rclone.

In the first case, we download the Linux executable in AppImage format, which runs the automatic synchronizations and works on any recent distribution, including my Mageia 9. With this executable we designate the directories to synchronize, on the local side and on the cloud side, and we can launch it in the background to work silently. I chose not to work directly on the files in the cloud: the reference remains the local files, and the cloud is the backup.

The equivalent also exists on Android; it is a practical way to synchronize in the background the multimedia files stored on the phone and to clean the phone up automatically on a regular basis.

Incidentally, on Android you will also find a utility for synchronizing contacts and the calendar, based on the CardDAV and CalDAV protocols (extensions of WebDAV).

On the security side, there is also an Android application for two-factor authentication. kDrive also offers a whole range of other services, including collaborative work tools and (large) file transfers.

Backup your kDrive cloud with rclone

First, open the kDrive in a browser and note the number corresponding to the kDrive identifier in the URL, for example https://ksuite.infomaniak.com/kdrive/app/drive/123456. The kDrive identifier taken as an example here is 123456, so the WebDAV address will be davs://123456.connect.kdrive.infomaniak.com/ (rclone expects the https:// form of the same address, https://123456.connect.kdrive.infomaniak.com).
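The step above can be sketched as a small shell function. It is only an illustration: kdrive_webdav_url is a hypothetical name, and it assumes the browser address always contains /drive/ followed by the numeric identifier, as in the example URL.

```bash
#!/bin/bash
# Hypothetical helper (not part of rclone or kDrive): turn the kDrive address
# shown in the browser into the WebDAV URL given to rclone.
# Assumption: the identifier is the run of digits that follows "/drive/".
kdrive_webdav_url() {
    local id
    # keep only the digits following "/drive/" in the address
    id=$(printf '%s\n' "$1" | sed -n 's#.*/drive/\([0-9][0-9]*\).*#\1#p')
    if [ -z "$id" ]; then
        echo "no kDrive identifier found in: $1" >&2
        return 1
    fi
    printf 'https://%s.connect.kdrive.infomaniak.com\n' "$id"
}

# Usage:
# kdrive_webdav_url https://ksuite.infomaniak.com/kdrive/app/drive/123456
# prints https://123456.connect.kdrive.infomaniak.com
```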

You will first need to create an application password that you will call webdav as explained on this page https://www.infomaniak.com/fr/support/faq/2855/gerer-les-mots-de-passe-dapplication

We then create the kDrive configuration with rclone by typing rclone config; here is the result:

Current remotes:

Name Type
==== ====
google-drive drive
google-secret crypt

e) Edit existing remote
n) New remote
d) Delete remote
r) Rename remote
c) Copy remote
s) Set configuration password
q) Quit config
e/n/d/r/c/s/q> n
name> kdrive
Option Storage.
Type of storage to configure.
Enter a string value. Press Enter for the default ("").
Choose a number from below, or type in your own value.
 1 / 1Fichier
   \ "fichier"
 2 / Alias for an existing remote
   \ "alias"
 3 / Amazon Drive
   \ "amazon cloud drive"
 4 / Amazon S3 Compliant Storage Providers including AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, SeaweedFS, and Tencent COS
   \ "s3"
 5 / Backblaze B2
   \ "b2"
 6 / Better checksums for other remotes
   \ "hasher"
 7 / Box
   \ "box"
 8 / Cache a remote
   \ "cache"
 9 / Citrix Sharefile
   \ "sharefile"
10 / Compress a remote
   \ "compress"
11 / Dropbox
   \ "dropbox"
12 / Encrypt/Decrypt a remote
   \ "crypt"
13 / Enterprise File Fabric
   \ "filefabric"
14 / FTP Connection
   \ "ftp"
15 / Google Cloud Storage (this is not Google Drive)
   \ "google cloud storage"
16 / Google Drive
   \ "drive"
17 / Google Photos
   \ "google photos"
18 / Hadoop distributed file system
   \ "hdfs"
19 / Hubic
   \ "hubic"
20 / In memory object storage system.
   \ "memory"
21 / Jottacloud
   \ "jottacloud"
22 / Koofr
   \ "koofr"
23 / Local Disk
   \ "local"
24 / Mail.ru Cloud
   \ "mailru"
25 / Mega
   \ "mega"
26 / Microsoft Azure Blob Storage
   \ "azureblob"
27 / Microsoft OneDrive
   \ "onedrive"
28 / OpenDrive
   \ "opendrive"
29 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)
   \ "swift"
30 / Pcloud
   \ "pcloud"
31 / Put.io
   \ "putio"
32 / QingCloud Object Storage
   \ "qingstor"
33 / SSH/SFTP Connection
   \ "sftp"
34 / Sia Decentralized Cloud
   \ "sia"
35 / Sugarsync
   \ "sugarsync"
36 / Tardigrade Decentralized Cloud Storage
   \ "tardigrade"
37 / Transparently chunk/split large files
   \ "chunker"
38 / Union merges the contents of several upstream fs
   \ "union"
39 / Uptobox
   \ "uptobox"
40 / Webdav
   \ "webdav"
41 / Yandex Disk
   \ "yandex"
42 / Zoho
   \ "zoho"
43 / http Connection
   \ "http"
44 / premiumize.me
   \ "premiumizeme"
45 / seafile
   \ "seafile"
Storage> 40

Option url.
URL of http host to connect to.
Eg https://example.com.
Enter a string value. Press Enter for the default ("").
url> https://123456.connect.kdrive.infomaniak.com
Option vendor.
Name of the Webdav site/service/software you are using.
Enter a string value. Press Enter for the default ("").
Choose a number from below, or type in your own value.
 1 / Nextcloud
   \ "nextcloud"
 2 / Owncloud
   \ "owncloud"
 3 / Sharepoint Online, authenticated by Microsoft account
   \ "sharepoint"
 4 / Sharepoint with NTLM authentication, usually self-hosted or on-premises
   \ "sharepoint-ntlm"
 5 / Other site/service or software
   \ "other"
vendor> 5
Option user.
User name.
In case NTLM authentication is used, the username should be in the format 'Domain\User'.
Enter a string value. Press Enter for the default ("").
user> login-email-for-kDrive
Option pass.
Password.
Choose an alternative below. Press Enter for the default (n).
y) Yes type in my own password
g) Generate random password
n) No leave this optional password blank (default)
y/g/n> y
Enter the password:
password:
Confirm the password:
password:
Option bearer_token.
Bearer token instead of user/pass (eg a Macaroon).
Enter a string value. Press Enter for the default ("").
bearer_token>
Edit advanced config?
y) Yes
n) No (default)
y/n>
--------------------
[kdrive]
type = webdav
url = https://123456.connect.kdrive.infomaniak.com
vendor = other
user = login-email-for-kDrive
pass = *** ENCRYPTED ***
--------------------
y) Yes this is OK (default)
e) Edit this remote
d) Delete this remote
y/e/d> y
Current remotes:

Name Type
==== ====
google-drive drive
google-secret crypt
kdrive webdav

e) Edit existing remote
n) New remote
d) Delete remote
r) Rename remote
c) Copy remote
s) Set configuration password
q) Quit config
e/n/d/r/c/s/q> q

As storage type, enter 40 for WebDAV (the numbers change between rclone versions; you can also type webdav instead of the number), then the WebDAV URL, the e-mail address used to log in to the kDrive account, and the application password created above. To check that the connection works, type rclone lsd kdrive:, which gives:

-1 2025-01-02 16:05:36 -1 Common documents
-1 2025-01-01 23:38:26 -1 homepage

To list the office subdirectory of Common documents, type rclone lsd kdrive:"Common documents/office" (the quotes are needed because of the space). For other uses of rclone, refer to the Google Drive section below, replacing the name of the remote with kdrive.
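The interactive session above can also be replaced by a single rclone config create command, which is handy to recreate the remote on another machine. This is a sketch under stated assumptions: create_kdrive_remote is a hypothetical helper, and with DRY_RUN=1 it only prints the command instead of running it. The --obscure flag asks rclone to store the application password obscured, as the interactive menu does; note that a password typed on the command line can end up in the shell history.

```bash
#!/bin/bash
# Sketch: create the "kdrive" WebDAV remote without the interactive menu.
# create_kdrive_remote is a hypothetical helper; with DRY_RUN=1 the rclone
# command is only printed, not executed.
RCLONE="${RCLONE:-rclone}"

create_kdrive_remote() {
    local id="$1" user="$2" app_pass="$3"
    # same answers as in the interactive session: webdav, vendor "other",
    # login e-mail and application password (--obscure stores it obscured)
    set -- "$RCLONE" config create kdrive webdav \
        url "https://${id}.connect.kdrive.infomaniak.com" \
        vendor other \
        user "$user" \
        pass "$app_pass" \
        --obscure
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"
    else
        "$@"
    fi
}

# Usage (123456 being the example identifier), then check the connection:
# create_kdrive_remote 123456 'your-kdrive-email' 'your-application-password'
# rclone lsd kdrive:
```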

I use two kinds of synchronization with kDrive:

- a one-way copy of my photos (rclone copy, nothing is deleted on the cloud side), which I launch occasionally from my PC where they are physically stored;

- a full synchronization (rclone sync) of my office data and the pages of my sites, launched automatically every week via cron.

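The first script, for the weekly synchronization of the office data and the pages of my sites:

#!/bin/bash
###########################################
# kdrive sync script
###########################################
# log file
LOG_FILE="/home/olivier/tmp/rclone-kdrive.log"
# filters file with files to exclude
FILTER_DIR="/home/olivier/Documents/filtre-rclone"
# definition of sources and destinations to synchronize
# (placeholder local paths, adapt them to your own directories)
OFFICE_SOURCE="/path/to/office"
OFFICE_DESTINATION="kdrive:Common documents/office"
SOURCE_HOMEPAGE="/path/to/homepage"
DESTINATION_HOMEPAGE="kdrive:homepage"

echo "" >> "$LOG_FILE"
ladate=$(date +"%Y-%m-%d--%T")
echo "Backup of $ladate" >> "$LOG_FILE"
echo "Office synchronization" >> "$LOG_FILE"
/usr/local/bin/rclone sync "$OFFICE_SOURCE" "$OFFICE_DESTINATION" --filter-from "$FILTER_DIR" --skip-links -v --log-file "$LOG_FILE"
echo "Homepage synchronization" >> "$LOG_FILE"
/usr/local/bin/rclone sync "$SOURCE_HOMEPAGE" "$DESTINATION_HOMEPAGE" --filter-from "$FILTER_DIR" --skip-links -v --log-file "$LOG_FILE"
echo "End of synchronization" >> "$LOG_FILE"
# copy the user's rclone configuration to root's account
cp -R /home/olivier/.config/rclone/ /root/.config/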
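The first script is the one launched automatically every week. Assuming it is saved as /root/bin/kdrive-sync.sh, made executable and run from root's crontab (the path is hypothetical), the cron entry can be installed with a small filter; cron_add is an illustration, not a standard command. The schedule 0 3 * * 0 means every Sunday at 03:00, and the absolute path /usr/local/bin/rclone used in the script matters here because cron runs with a minimal PATH.

```bash
#!/bin/bash
# Hypothetical helper: read a crontab on stdin and print it on stdout with
# exactly one line running SCRIPT at SCHEDULE (older lines for SCRIPT are dropped).
cron_add() {
    local script="$1" schedule="$2"
    # keep every line that does not mention the script
    grep -vF -- "$script" || true
    printf '%s %s\n' "$schedule" "$script"
}

# Usage, as root (schedule fields: minute hour day-of-month month day-of-week):
# crontab -l 2>/dev/null | cron_add /root/bin/kdrive-sync.sh '0 3 * * 0' | crontab -
```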

The second script copies the local photo directory to the remote photo directory (copy, not sync):

#!/bin/bash
###########################################
# kdrive synchronization script
###########################################
# log file
LOG_FILE="/home/olivier/tmp/rclone-kdrive.log"
# filter file with files to exclude
FILTER_REP="/home/olivier/Documents/filtre-rclone"
# definition of sources and destinations to synchronize
# photos to synchronize
SOURCE_PHOTOS="/run/media/olivier/Espace5-4To/photos"
DESTINATION_PHOTOS="kdrive:Common documents/Photos"

echo "" >> "$LOG_FILE"
ladate=$(date +"%Y-%m-%d--%T")
echo "Backup of $ladate" >> "$LOG_FILE"
echo "Photo synchronization" >> "$LOG_FILE"
/usr/local/bin/rclone copy "$SOURCE_PHOTOS" "$DESTINATION_PHOTOS" --filter-from "$FILTER_REP" --skip-links -v --log-file "$LOG_FILE"

A third script works in the opposite direction: it copies to the local disk the mobile photos that the Android application has synchronized to the kDrive:

#!/bin/bash
###########################################
# mobile photo synchronization script
###########################################
# log file
LOG_FILE="/home/olivier/tmp/rclone-kdrive.log"
# filter file with files to exclude
FILTER_REP="/home/olivier/Documents/filtre-rclone"
# definition of sources and destinations to synchronize
DESTINATION="/run/media/olivier/Espace5-4To/photos/Photos-mobile"
SOURCE="kdrive:Common documents/Photos/Photos-mobile"

echo "" >> "$LOG_FILE"
ladate=$(date +"%Y-%m-%d--%T")
echo "Backup of $ladate" >> "$LOG_FILE"
echo "Synchronization of mobile photos" >> "$LOG_FILE"
/usr/local/bin/rclone copy "$SOURCE" "$DESTINATION" --filter-from "$FILTER_REP" --skip-links -v --log-file "$LOG_FILE"
echo "End of synchronization" >> "$LOG_FILE"

And here is the content of the filter file /home/olivier/Documents/filtre-rclone, which excludes backup, temporary and lock files, shortcuts, and files or directories whose name starts with a dot:

- *.bak

- .*

- *~

- ~*

- .*/**

- *.lock

- *.lnk
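To avoid typing mistakes, the filter file can be generated with a here-document. This is a sketch: write_filter_file is a hypothetical name, and the rules are exactly the ones listed above (in an rclone filter file, a line starting with - excludes the matching files). Since rclone ls accepts the same --filter-from option, listing a source directory with it is a quick way to check which files the scripts will actually send.

```bash
#!/bin/bash
# Hypothetical helper: write the rclone exclusion rules shown above to a file.
write_filter_file() {
    cat > "$1" <<'EOF'
- *.bak
- .*
- *~
- ~*
- .*/**
- *.lock
- *.lnk
EOF
}

# Usage:
# write_filter_file /home/olivier/Documents/filtre-rclone
# /usr/local/bin/rclone ls /run/media/olivier/Espace5-4To/photos --filter-from /home/olivier/Documents/filtre-rclone
```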

Whichever script you use, it is a good idea to launch it dry first, adding --dry-run to each rclone command, to check that it does what you want. Be careful: the sync command deletes the files in the destination directory that are not in the source directory; to avoid this, use copy instead of sync. For my photo directory, since photos from my mobile are synchronized to the kDrive in parallel, sync would delete the new mobile photos uploaded to the kDrive, hence copy instead of sync.
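The three scripts above repeat the same pattern: a label written to the log, then an rclone copy or sync call with the same filter and log options. As a sketch, that pattern can be factored into a function in which DRY_RUN=1 adds --dry-run to the call and the mode makes the copy versus sync choice explicit. rclone_transfer is a hypothetical helper; the default paths come from the scripts and should be adapted.

```bash
#!/bin/bash
# Sketch: one function for the rclone call repeated in the kDrive scripts.
# rclone_transfer is a hypothetical helper; the defaults below can be
# overridden through the environment.
RCLONE="${RCLONE:-/usr/local/bin/rclone}"
LOG_FILE="${LOG_FILE:-/home/olivier/tmp/rclone-kdrive.log}"
FILTER_FILE="${FILTER_FILE:-/home/olivier/Documents/filtre-rclone}"

# rclone_transfer MODE LABEL SOURCE DESTINATION
#   MODE=copy: never deletes anything on the destination side
#   MODE=sync: also deletes destination files that no longer exist in SOURCE
rclone_transfer() {
    local mode="$1" label="$2" src="$3" dst="$4"
    case "$mode" in
        copy|sync) ;;
        *) echo "unknown mode: $mode (expected copy or sync)" >&2; return 2 ;;
    esac
    # DRY_RUN=1 appends --dry-run: rclone only reports what it would do
    if [ "${DRY_RUN:-0}" = 1 ]; then set -- --dry-run; else set --; fi
    echo "$label" >> "$LOG_FILE"
    "$RCLONE" "$mode" "$src" "$dst" --filter-from "$FILTER_FILE" \
        --skip-links -v --log-file "$LOG_FILE" "$@"
}
```

For example, DRY_RUN=1 rclone_transfer copy "Photo synchronization" /run/media/olivier/Espace5-4To/photos "kdrive:Common documents/Photos" only logs what would be sent; the same call without DRY_RUN=1 performs the copy once the log looks right.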

Here is what the log can look like for a synchronization with changes:

Office synchronization
2025/01/04 14:00:56 INFO: notre-base-anout.kdbx: Copied (replaced existing)
2025/01/04 14:01:18 INFO: Documents-Véronique/Documents: Deleted
2025/01/04 14:01:18 INFO:
Transferred: 57.788 KiB / 57.788 KiB, 100%, 2.451 KiB/s, ETA 0s
Checks: 16857 / 16857, 100%
Deleted: 1 (files), 0 (dirs), 33 B (freed)
Transferred: 1 / 1, 100%
Elapsed time: 22.7s

and without changes:

Backup of 2025-01-04--14:32:10
Office synchronization
2025/01/04 14:32:33 INFO: There was nothing to transfer
2025/01/04 14:32:33 NOTICE:
Transferred: 0 B / 0 B, -, 0 B/s, ETA -
Checks: 16856 / 16856, 100%
Elapsed time: 23.1s

Encrypted backup to the Google Drive cloud with rclone

Presentation

rclone offers a solution roughly similar to the kDrive application: it synchronizes a local folder with its cloud counterpart from the command line and integrates easily into a bash script run automatically via cron. Its advantage is that it can encrypt the data, which kDrive does not allow at this stage. In addition, rclone works with many clouds; this section presents synchronization with Google Drive.

Preparing the Google Drive account

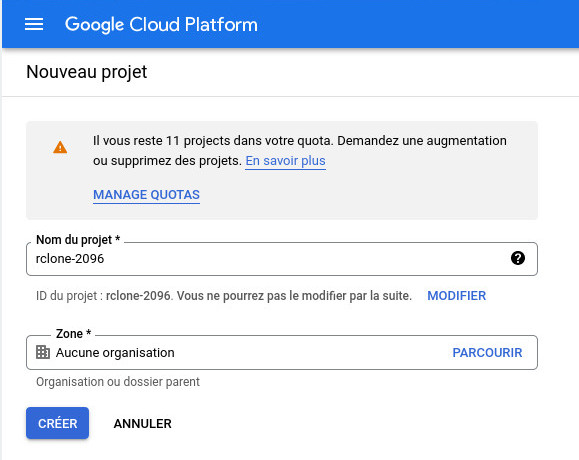

I'm assuming you have a Google account and therefore Google Drive. Some preparation is needed on the latter to give rclone access. Go to the Google Cloud Platform console and create a new project; I called mine rclone and kept the number Google proposed.

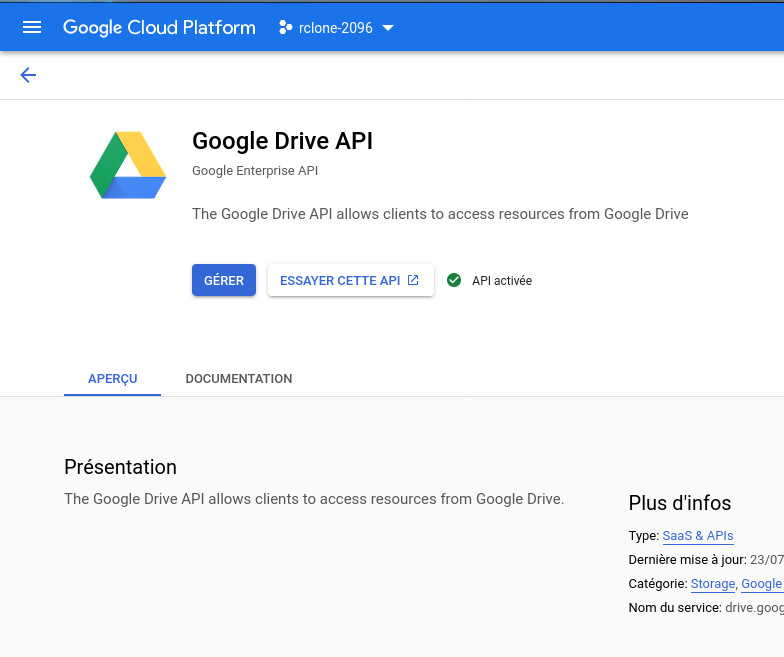

Now check that the project is selected and displayed at the top of the window, then enable the Google Drive API: click + ENABLE APIS AND SERVICES, choose the Google Drive icon, and click Enable. The window below shows what it looks like once the API is enabled.

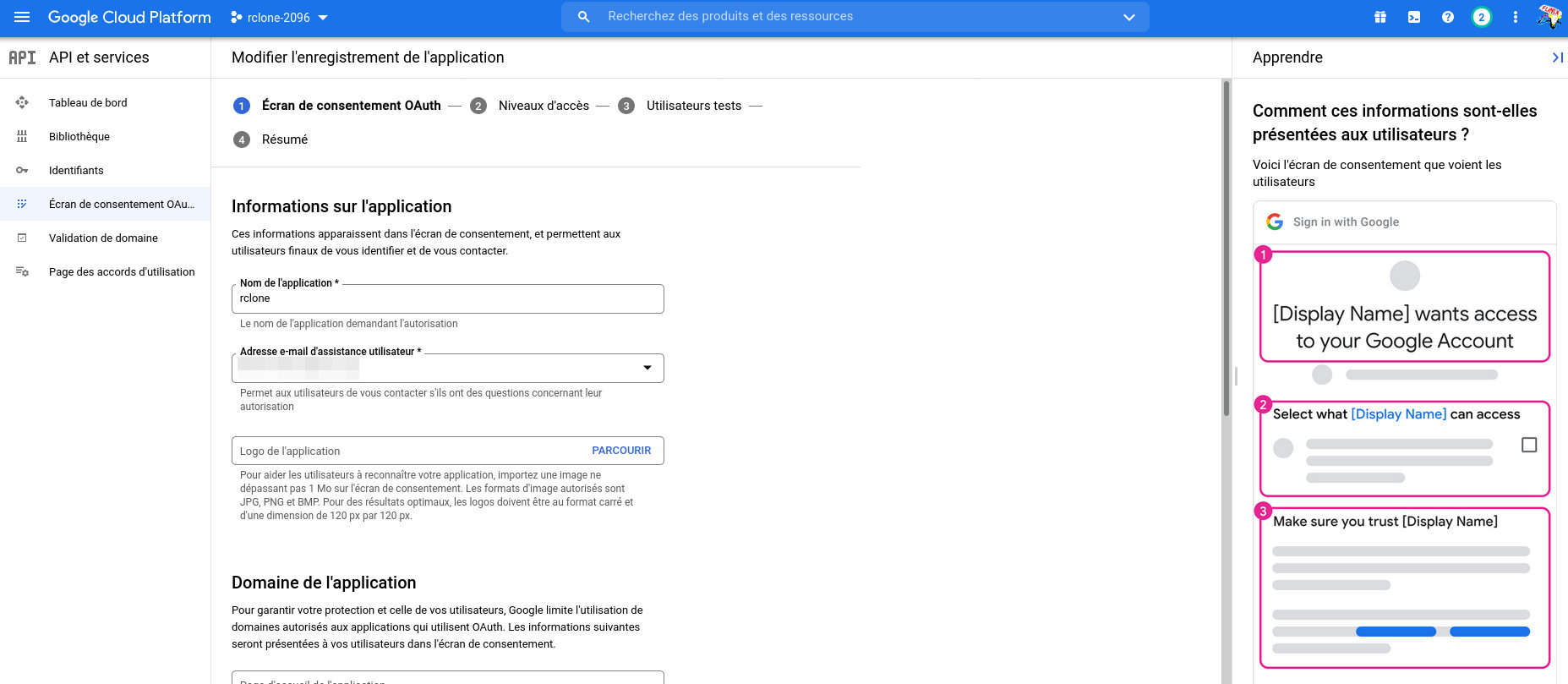

Now, in the menu on the left, click OAuth consent screen. For the user type you can choose between Internal and External; Internal, which dispenses with validation of the application, requires a Google Workspace account, which is asking a lot, so I chose External. In the first screen, OAuth consent screen, I filled in at least the application name (rclone), my e-mail address and the developer's e-mail address.

In screen 2, Scopes, I didn't change anything. In screen 3, Test users, I added myself, then went back to the dashboard.

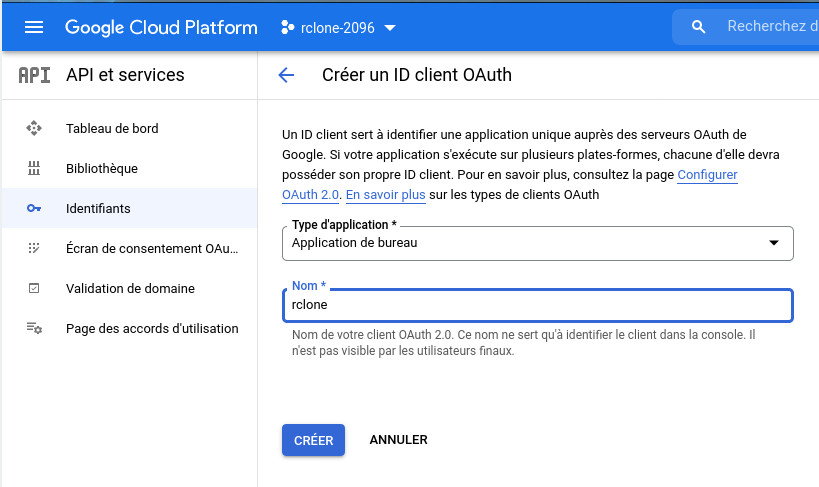

Still in the menu on the left, click Credentials, then + CREATE CREDENTIALS and OAuth client ID. As application type I chose Desktop app, and I also named it rclone.

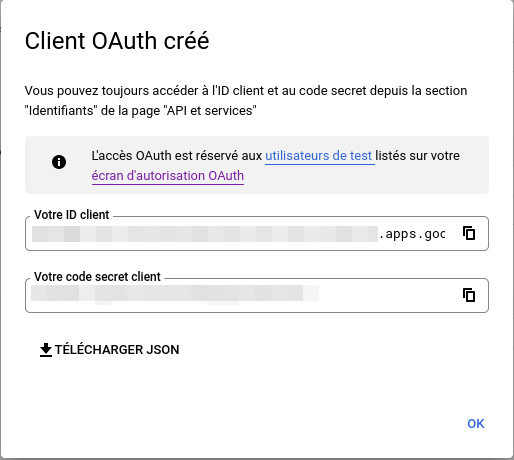

Click CREATE: a window gives you the client ID and the client secret, which will be needed later to set up the connection with rclone.

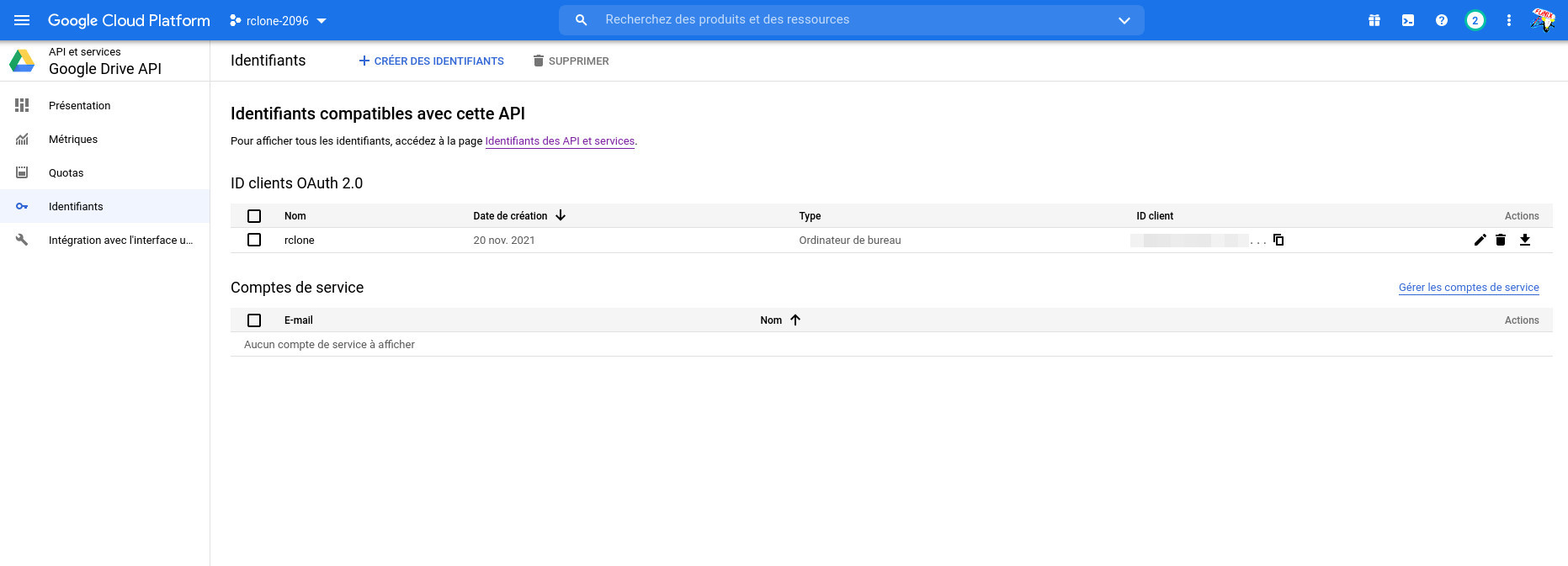

Click OK to close the window; back on the dashboard, select the Google Drive API and you will find the OAuth client.

We now configure rclone by typing

rclone config

which gives something like this:

2021/11/20 18:06:01 NOTICE: Config file "/home/olivier/.config/rclone/rclone.conf" not found - using defaults
No remotes found - make a new one
n) New remote
s) Set configuration password
q) Quit config

We type n to create the new remote:

n/s/q> n

I named it google-drive:

name> google-drive
Option Storage.
Type of storage to configure.
Enter a string value. Press Enter for the default ("").
Choose a number from below, or type in your own value.


The list of storage types is the same as the one shown above for kDrive. At this point I choose Google Drive, which corresponds to number 16:

Storage> 16
Option client_id.
Google Application Client Id
Setting your own is recommended.
See https://rclone.org/drive/#making-your-own-client-id for how to create your own.
If you leave this blank, it will use an internal key which is low performance.
Enter a string value. Press Enter for the default ("").

We enter the client_id provided by Google:

client_id> a-clientid-number.apps.googleusercontent.com
Option client_secret.
OAuth Client Secret.
Leave blank normally.
Enter a string value. Press Enter for the default ("").

Here we enter the client_secret provided by Google:

client_secret> a-long-password
Option scope.
Scope that rclone should use when requesting access from drive.
Enter a string value. Press Enter for the default ("").
Choose a number from below, or type in your own value.
 1 / Full access all files, excluding Application Data Folder.
   \ "drive"
 2 / Read-only access to file metadata and file contents.
   \ "drive.readonly"
   / Access to files created by rclone only.
 3 | These are visible in the drive website.
   | File authorization is revoked when the user deauthorizes the app.
   \ "drive.file"
   / Allows read and write access to the Application Data folder.
 4 | This is not visible in the drive website.
   \ "drive.appfolder"
   / Allows read-only access to file metadata but
 5 | does not allow any access to read or download file content.
   \ "drive.metadata.readonly"

I choose full access:

scope> 1
Option root_folder_id.
ID of the root folder.
Leave blank normally.
Fill in to access "Computers" folders (see docs), or for rclone to use a non-root folder as its starting point.
Enter a string value. Press Enter for the default ("").

I leave it blank:

root_folder_id>
Option service_account_file.
Service Account Credentials JSON file path.
Leave blank normally.
Needed only if you want to use SA instead of interactive login.
Leading `~` will be expanded in the file name as will environment variables such as `${RCLONE_CONFIG_DIR}`.
Enter a string value. Press Enter for the default ("").

I leave it blank too:

service_account_file>
Edit advanced config?
y) Yes
n) No (default)

I choose not to go into the advanced settings:

y/n> n
Use auto config?
 * Say Y if not sure
 * Say N if you are working on a remote or headless machine

Here I choose auto config:

y) Yes (default)
n) No
y/n> y
2021/11/20 19:26:56 NOTICE: Make sure your Redirect URL is set to "urn:ietf:wg:oauth:2.0:oob" in your custom config.
2021/11/20 19:26:56 NOTICE: If your browser doesn't open automatically go to the following link: http://127.0.0.1:53682/auth?state=VqC4HS6IDo-WLqR-CvjCOw
2021/11/20 19:26:56 NOTICE: Log in and authorize rclone for access
2021/11/20 19:26:56 NOTICE: Waiting for code...

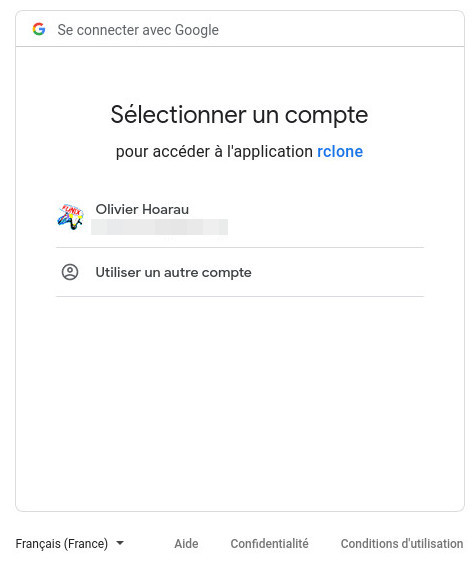

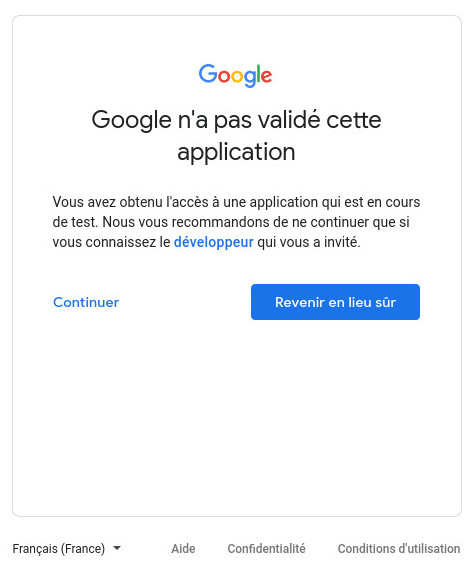

At this point the browser opens and we should see this screen:

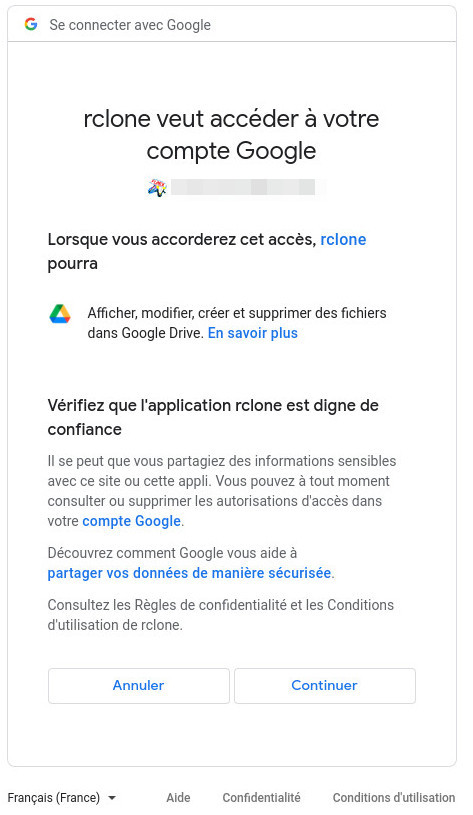

When you choose your Google account, a window with a warning comes up next; we ignore the warning, click Continue, and here is the new window:

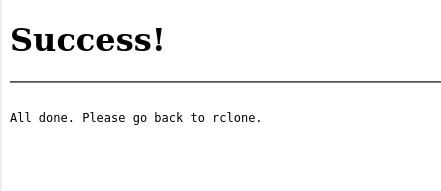

We obviously choose Continue, and that's it on that side.

Back in the terminal, we see:

2021/11/20 19:27:15 NOTICE: Got code
Configure this as a Shared Drive (Team Drive)?
y) Yes
n) No (default)

This is a personal backup, so I choose not to configure a shared drive:

y/n> n
--------------------
[google-drive]
type = drive
client_id = a-clientid-number.apps.googleusercontent.com
client_secret = a-long-password
scope = drive
root_folder_id =
token = {"access_token":"lots-of-alphanumeric-characters","token_type":"Bearer","refresh_token":"1//more-alphanumeric-characters","expiry":"2021-11-20T20:33:21.300023553+01:00"}
team_drive =
--------------------
y) Yes this is OK (default)
e) Edit this remote
d) Delete this remote

Type y to complete the configuration:

y/e/d> y
Current remotes:

Name Type
==== ====
google-drive drive

e) Edit existing remote
n) New remote
d) Delete remote
r) Rename remote
c) Copy remote
s) Set configuration password
q) Quit config

We can now quit:

e/n/d/r/c/s/q> q

If, after using rclone for some time, you get the following error:

2021/11/28 07:29:09 Failed to create file system for "google-secret:Backup": failed to make remote "google-drive:/secret" to wrap: couldn't find root directory ID: Get "https://www.googleapis.com/drive/v3/files/root?alt=json&fields=id&prettyPrint=false&supportsAllDrives=true": couldn't fetch token - maybe it has expired? - refresh with "rclone config reconnect google-drive:": oauth2: cannot fetch token: 400 Bad Request
Response: {
  "error": "invalid_grant",
  "error_description": "Token has been expired or revoked."
}

it means the token has expired. The token must be renewed regularly, which is a bit annoying when rclone is integrated into a script. To renew it, type the following command:

rclone config reconnect google-drive:

Here is the result:

Already have a token - refresh?
y) Yes (default)
n) No
y/n> y
Use auto config?
 * Say Y if not sure
 * Say N if you are working on a remote or headless machine
y) Yes (default)
n) No
y/n> y
2021/11/28 07:42:13 NOTICE: Make sure your Redirect URL is set to "urn:ietf:wg:oauth:2.0:oob" in your custom config.
2021/11/28 07:42:13 NOTICE: If your browser doesn't open automatically go to the following link: http://127.0.0.1:53682/auth?state=eohEThkXmlb5t4W_1jhtdg
2021/11/28 07:42:13 NOTICE: Log in and authorize rclone for access
2021/11/28 07:42:13 NOTICE: Waiting for code...

We are then sent to the browser to authenticate, and back in the terminal:

2021/11/28 07:42:31 NOTICE: Got code
Configure this as a Shared Drive (Team Drive)?
y) Yes
n) No (default)
y/n> n

In Google Cloud Platform, while your rclone application is in testing mode, the token has to be renewed every week; to keep it longer, you need to switch the application to production mode. In that case, when the consent window is displayed in the browser, you will probably get a warning because your application has not been verified by Google.
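Since the Google token can expire between two runs, a backup script can test the remote before doing anything and leave a clear message. This is a sketch, not an rclone feature: check_remote is a hypothetical helper that runs rclone lsd on the remote and, if it fails, suggests the reconnect command shown above. A failure can also come from the network, hence the cautious wording, and the reconnection itself still needs a browser, so it cannot be done from cron.

```bash
#!/bin/bash
RCLONE="${RCLONE:-/usr/local/bin/rclone}"

# Hypothetical helper: succeed if REMOTE answers, otherwise explain how to
# renew the token (the failure may also have another cause, e.g. the network).
check_remote() {
    local remote="$1"   # e.g. google-drive:
    if "$RCLONE" lsd "$remote" >/dev/null 2>&1; then
        return 0
    fi
    echo "cannot reach $remote, the token may have expired" >&2
    echo "run: rclone config reconnect $remote" >&2
    return 1
}

# Usage at the top of a backup script:
# check_remote google-drive: || exit 1
```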

Configuring the sending and storage of encrypted data

At this point we can already list the directories at the root of the Google Drive space by typing

rclone lsd google-drive:

and send files or directories to a test directory on the drive:

rclone copy -v --progress filename google-drive:test

The command below lists the contents of the test directory:

rclone lsf google-drive:test

We find the file we just sent, filename, which we can also view directly in Google Drive.

But what interests us is sending and storing encrypted data. We start the configuration again by typing

rclone config

which gives:

Current remotes:

Name Type
==== ====
google-drive drive

e) Edit existing remote
n) New remote
d) Delete remote
r) Rename remote
c) Copy remote
s) Set configuration password
q) Quit config

We find the google-drive remote created earlier, and we create a new one by typing n:

e/n/d/r/c/s/q> n

I call it google-secret:

name> google-secret
Option Storage.
Type of storage to configure.
Enter a string value. Press Enter for the default ("").
Choose a number from below, or type in your own value.


This time I choose number 12, Encrypt/Decrypt a remote (crypt), from the same list of storage types:

Storage> 12
Option remote.
Remote to encrypt/decrypt.
Normally should contain a ':' and a path, eg "myremote:path/to/dir", "myremote:bucket" or maybe "myremote:" (not recommended).
Enter a string value. Press Enter for the default ("").

Here I give the name of the Google Drive remote created earlier and the directory where the encrypted files will be stored:

remote> google-drive:/secret
Option filename_encryption.
How to encrypt the filenames.
Enter a string value. Press Enter for the default ("standard").
Choose a number from below, or type in your own value.
   / Encrypt the filenames.
 1 | See the docs for the details.
   \ "standard"
 2 / Very simple filename obfuscation.
   \ "obfuscate"
   / Don't encrypt the file names.
 3 | Adds a ".bin" extension only.
   \ "off"

I choose to encrypt the file names:

filename_encryption> 1
Option directory_name_encryption.
Option to either encrypt directory names or leave them intact.
NB If filename_encryption is "off" then this option will do nothing.
Enter a boolean value (true or false). Press Enter for the default ("true").
Choose a number from below, or type in your own value.
 1 / Encrypt directory names.
   \ "true"
 2 / Don't encrypt directory names, leave them intact.
   \ "false"

On the other hand, I choose to leave the directory names in clear:

directory_name_encryption> 2
Option password.
Password or pass phrase for encryption.
Choose an alternative below.
y) Yes type in my own password
g) Generate random password

I choose to type my own password (to be kept carefully with a tool like KeePassXC):

y/g> y
Enter the password:
password:
Confirm the password:
password:
Option password2.
Password or pass phrase for salt.
Optional but recommended.
Should be different to the previous password.
Choose an alternative below. Press Enter for the default (n).
y) Yes type in my own password
g) Generate random password
n) No leave this optional password blank (default)

I choose not to set a second password:

y/g/n>
Edit advanced config?
y) Yes
n) No (default)
y/n> n
--------------------
[google-secret]
type = crypt
remote = google-drive:/secret
directory_name_encryption = false
password = *** ENCRYPTED ***
--------------------
y) Yes this is OK (default)
e) Edit this remote
d) Delete this remote
y/e/d> y

And that's it: we now see the Google Drive remote in clear and the encrypted remote:

Current remotes:

Name Type
==== ====
google-drive drive
google-secret crypt

e) Edit existing remote
n) New remote
d) Delete remote
r) Rename remote
c) Copy remote
s) Set configuration password
q) Quit config
e/n/d/r/c/s/q> q
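The encrypted remote could also be created with a single rclone config create command reproducing the answers above (remote google-drive:/secret, standard file name encryption, directory names in clear, no second password). This is a sketch with a hypothetical helper name: with DRY_RUN=1 it only prints the command, and --obscure stores the password obscured as the interactive session does. Whatever the method, without this password the files stored in secret cannot be decrypted, hence the importance of keeping it in a safe place.

```bash
#!/bin/bash
RCLONE="${RCLONE:-rclone}"

# Hypothetical helper: create the "google-secret" crypt remote on top of
# google-drive:/secret with the same answers as the session above.
# With DRY_RUN=1 the command is only printed.
create_secret_remote() {
    local crypt_pass="$1"   # encryption password, keep a copy e.g. in KeePassXC
    set -- "$RCLONE" config create google-secret crypt \
        remote google-drive:/secret \
        filename_encryption standard \
        directory_name_encryption false \
        password "$crypt_pass" \
        --obscure
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"
    else
        "$@"
    fi
}
```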

Here is what the ~/.config/rclone/rclone.conf file now contains:

[google-drive]
type = drive
client_id = a-clientid-number.apps.googleusercontent.com
client_secret = a-long-password
scope = drive
root_folder_id =
token = {"access_token":"lots-of-alphanumeric-characters","token_type":"Bearer","refresh_token":"lots-of-alphanumeric-characters","expiry":"2021-11-20T20:33:21.300023553+01:00"}
team_drive =

[google-secret]
type = crypt
remote = google-drive:/secret
directory_name_encryption = false
password = some-password
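Note that rclone does not really encrypt the passwords stored in this file: it only obscures them, which is reversible, so anyone able to read rclone.conf can recover the encryption password. It is therefore worth checking that the file is readable by its owner only, or setting a configuration password with option s) of rclone config. A minimal sketch with a hypothetical function name; the command rclone config file shows the path of the configuration file actually in use.

```bash
#!/bin/bash
# Hypothetical helper: make rclone.conf readable and writable by its owner only.
# "rclone config file" shows which configuration file rclone really uses.
protect_rclone_conf() {
    local conf="${1:-$HOME/.config/rclone/rclone.conf}"
    if [ ! -f "$conf" ]; then
        echo "configuration file not found: $conf" >&2
        return 1
    fi
    chmod 600 "$conf"
}

# Usage: protect_rclone_conf
#        protect_rclone_conf /root/.config/rclone/rclone.conf
```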

Use

Presentation

- copy to copy directories

- sync to synchronize two directories

- ls to list the contents of a directory

- check to verify that two directories are identical

and for the sub-options for the transfer options ( copy and sync ) which essentially have performance considerations, we can find (also non-exhaustive list)

The full list can be found here. There are also many filters, which I will not detail here because there are so many of them; they are described on this page and let you include or exclude files according to their name, size or age.
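To illustrate these three kinds of filters, here is a sketch that copies only PDF files of at most 10 MiB modified during the last 30 days. The function name copy_recent_pdfs and the thresholds are arbitrary examples, and --dry-run is left in so that nothing is transferred until you remove it:

```shell
#!/bin/bash
# Hypothetical example: select files by name, size and age.
#   --include "*.pdf"  only files whose name ends in .pdf
#   --max-size 10M     no file larger than 10 MiB
#   --max-age 30d      only files modified in the last 30 days
# --dry-run only reports what would be copied; remove it to really copy.
copy_recent_pdfs() {
  rclone copy "$1" "$2" \
    --include "*.pdf" --max-size 10M --max-age 30d \
    --dry-run -v
}
```

For example: copy_recent_pdfs Assurance google-secret:/Assurance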

Copy

To copy data to the drive in encrypted form, simply type:

rclone copy Assurance google-secret:/Assurance

That is, from the source directory Assurance to the destination directory google-secret:/Assurance. The files, encrypted and with encrypted names, therefore end up in the directory secret/Assurance of the drive.

Restoration with data decryption is done as follows:

rclone copy --progress google-secret:/Assurance ~/restoration

We simply swap the source and the destination; --progress displays the progress of the transfer.
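When several directories have to be sent the same way, the copy command can be wrapped in a loop. A minimal sketch, assuming it is run from the directory that contains them and that each one goes to a directory of the same name on google-secret, as in the Assurance example; backup_dirs is a hypothetical name:

```shell
#!/bin/bash
# Hypothetical helper: copy each local directory given as argument to a
# directory of the same name on the encrypted remote google-secret.
backup_dirs() {
  local d
  for d in "$@"; do
    rclone copy "$d" "google-secret:/$d" || return 1   # stop at the first failure
  done
}
```

For example: backup_dirs Assurance Finance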

List a tree

rclone ls google-drive:secret/Assurance

shows my files with their encrypted names, each preceded by its size in bytes:

    10755 tk027u5lifj4njm1aeenhgs0pj7iv5n9aap1fangpdrsaevagdssqegcr1cpo9v0nuao4cli4opj4
   978668 2bov2ml822nn5o05vo3v9h4tv8f80484807e9leel5u5kgcdk98g
     1281 lhm2fpk2b5j3hn7jodptealt2c
  1744221 gmf/f306dm735rmlv6mijhoeet2vn0
   341061 gmf/e1b6vekao1dvk5edkku932g4635b17tpreqp57k9s4dufuvkm8drqp48nr3a73m0d1c5ujtkohmnqj16dfp889u2i2tralp9dpa8vg8

On the other hand, the command below shows the file names in clear text:

rclone ls google-secret:/Assurance

Note that rclone lsl gives more information, including the modification date and time of each file.
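In the Google Drive web interface, only the encrypted names are visible. rclone cryptdecode translates such names back to clear text with the keys of the crypt remote, and its --reverse option does the opposite. A minimal sketch; the function names decode_names and encode_names are hypothetical:

```shell
#!/bin/bash
# Hypothetical helpers around "rclone cryptdecode".
# decode_names: print the clear names behind encrypted file names
# seen on the underlying remote (google-drive:secret/...).
decode_names() {
  rclone cryptdecode google-secret: "$@"
}

# encode_names: the reverse, give the encrypted name of a clear file name.
encode_names() {
  rclone cryptdecode --reverse google-secret: "$@"
}
```

For example, decode_names lhm2fpk2b5j3hn7jodptealt2c should reveal the clear name of the 1281-byte file listed above.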

Synchronize directories

To synchronize two directories we will use the following syntax

rclone sync source-directory google-drive:destination-directory

The source directory is the reference: sync makes the destination identical to it, deleting files from the destination if necessary, so avoid modifying the destination directory in parallel to prevent conflicts. It is useful to run it first with the -i option (interactive mode), which asks for confirmation before each change, to check its behavior and avoid an unfortunate copy or deletion.

There is also the --dry-run option, which performs a trial run showing what would be copied and what would be deleted, without changing anything.
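The two precautions can be combined: preview the changes with --dry-run, then apply them only after confirmation. A minimal sketch, assuming an interactive shell; the function name safe_sync is hypothetical:

```shell
#!/bin/bash
# Hypothetical wrapper: preview a sync with --dry-run, then apply it only
# if the user answers "y".
safe_sync() {
  local src="$1" dst="$2" answer
  rclone sync --dry-run "$src" "$dst" || return 1
  printf 'Apply these changes to %s? [y/N] ' "$dst" >&2
  read -r answer
  if [ "$answer" = "y" ]; then
    rclone sync "$src" "$dst"
  else
    echo "sync cancelled" >&2
    return 1
  fi
}
```

For example: safe_sync source-directory google-drive:destination-directory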

Check that two directories are identical

To check that the two directories are identical, type

rclone check source-directory google-drive:destination-directory
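On the encrypted remote, plain rclone check cannot compare checksums, because the crypt remote does not keep hashes of the original files, so it can essentially only compare sizes. For this case rclone provides cryptcheck: it reads the nonce of each remote file, encrypts the local file with it and compares the checksum with that of the encrypted file on the drive. A minimal sketch, assuming the local directories have the same names as their copies under google-secret:/, as in the Assurance example; verify_encrypted is a hypothetical name:

```shell
#!/bin/bash
# Hypothetical helper: verify local directories against their encrypted
# copies on google-secret with "rclone cryptcheck".
# Returns 1 if at least one directory does not match.
verify_encrypted() {
  local d failed=0
  for d in "$@"; do
    if rclone cryptcheck "$d" "google-secret:/$d"; then
      echo "OK: $d"
    else
      echo "MISMATCH: $d"
      failed=1
    fi
  done
  return "$failed"
}
```

For example: verify_encrypted Assurance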

Mount a drive directory

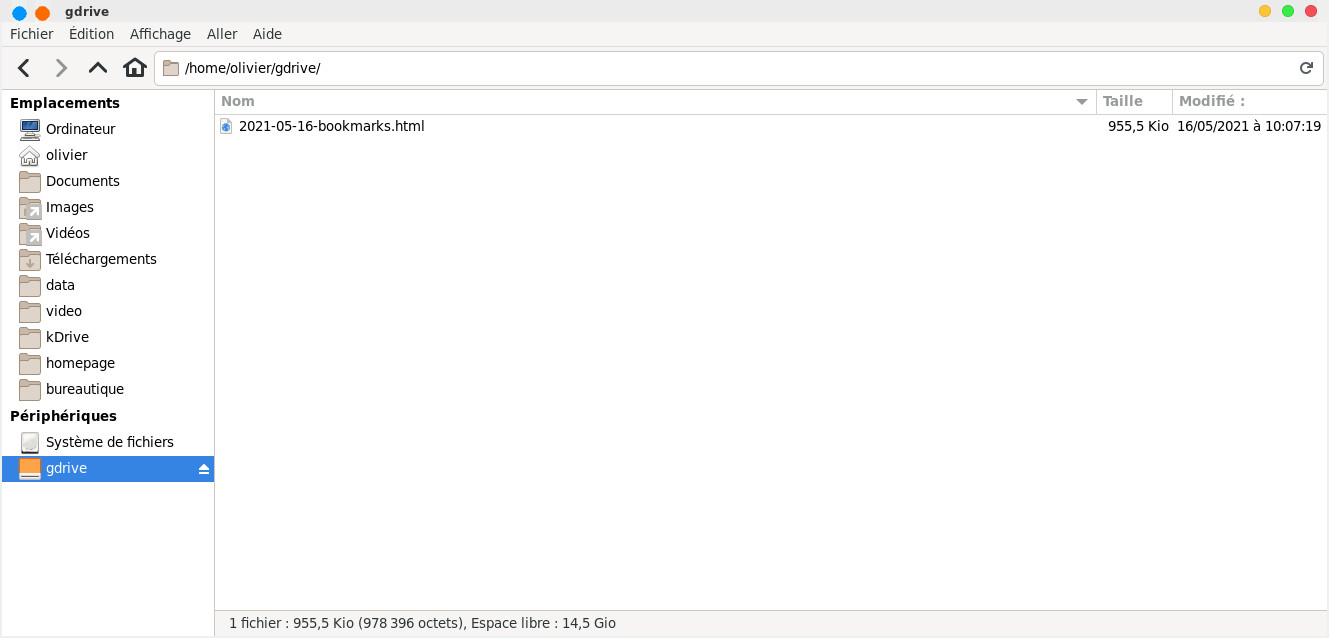

Among the other commands, you can mount your drive on a local directory (the directory must exist and FUSE must be installed). Here the test directory of the drive is mounted on the local directory gdrive in the home directory:

rclone mount google-drive:test ~/gdrive/

The drive is then mounted and can be used like any local space to move, delete or copy files. The encrypted remote can be mounted in the same way, in which case the files are seen locally in clear text.

Press CTRL+C to stop the mount.
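Since rclone mount stays in the foreground, the terminal remains busy until CTRL+C. The mount can instead run in the background with --daemon and be released later with fusermount -u. A minimal sketch for the encrypted remote, assuming FUSE is installed; the mount point ~/gdrive-secret and the function names are hypothetical:

```shell
#!/bin/bash
# Hypothetical helpers: mount the encrypted remote in the background,
# then unmount it. Assumes FUSE is installed (fusermount comes from fuse 2;
# with fuse 3 the command may be named fusermount3).
MOUNT_POINT="$HOME/gdrive-secret"   # hypothetical mount point

mount_secret() {
  mkdir -p "$MOUNT_POINT" || return 1
  # --daemon: return to the shell once the mount is up
  # --vfs-cache-mode writes: buffer written files on local disk first,
  # so that most applications can write files normally
  rclone mount google-secret: "$MOUNT_POINT" --daemon --vfs-cache-mode writes
}

umount_secret() {
  fusermount -u "$MOUNT_POINT"
}
```

After mount_secret, the files appear in clear text under ~/gdrive-secret until umount_secret is run.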

Files can also be shared from the Google Drive web interface at https://drive.google.com/drive/my-drive

rclone embedded in a bash script

You can now integrate these commands into a bash script launched periodically by cron. Here are two examples.

A simple script to make a copy

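#!/bin/bash
LOG_FILE="/var/log/rclone.log"
REP_FILTRE="/home/olivier/Documents/rep-filtre.txt"
DESTINATION="google-secret:Backup"
DESTINATION_ARCHIVE="google-secret:Backup-archive"

# Copy the files selected by the filter file from / to the encrypted remote.
# --skip-links: ignore symbolic links without warning
# --update: skip files that are newer on the drive than locally
/usr/local/bin/rclone -v \
  --skip-links --update \
  copy / "$DESTINATION" \
  --filter-from "$REP_FILTRE" --log-file "$LOG_FILE"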

and the variant to perform a synchronization

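#!/bin/bash
LOG_FILE="/home/olivier/tmp/sync-rclone.log"
REP_FILTRE="/home/olivier/Documents/rep-filtre.txt"
DESTINATION="google-secret:Backup"
DESTINATION_ARCHIVE="google-secret:Backup-archive"
# Date suffix such as .2021-12-04--07:55:25
ladate=$(date +"%Y-%m-%d--%T")
SUFFIX_DATE=".$ladate"

# Make the drive identical to the selected local files; files that would be
# overwritten or deleted are first moved to DESTINATION_ARCHIVE with the suffix
/usr/local/bin/rclone -v \
  --skip-links \
  --backup-dir "$DESTINATION_ARCHIVE" --suffix "$SUFFIX_DATE" \
  sync / "$DESTINATION" \
  --filter-from "$REP_FILTRE" --log-file "$LOG_FILE"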

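Note that this script archives old versions by date: any file that sync would overwrite or delete on the drive is first moved to the Backup-archive directory, with the date suffix added to its name. The rep-filtre.txt file may look like this: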

- /mana/data/bureautique/documents/**
- /mana/data/bureautique/Santé/Olivier/Accident\ Vélo/photos/**
- /mana/data/bureautique/Doc-Funix/funix/old/**
- /mana/data/bureautique/Doc-Funix/pdf/old/**
- /mana/data/bureautique/Doc-Funix/pdf/ebook/**
- /mana/data/bureautique/Généalogie/ancestris/siteweb/**
- /mana/data/bureautique/rep-filtre.txt
- /mana/data/bureautique/arborescence
- /mana/data/bureautique/**/.directory
# directories and files to include
+ /mana/data/bureautique/Finance/**
+ /mana/data/bureautique/Assurance/**
+ /mana/data/bureautique/Santé/**
+ /mana/data/bureautique/Mail/**
+ /mana/data/bureautique/Généalogie/**
+ /mana/data/bureautique/Home/**
+ /mana/data/bureautique/Identity/**
+ /mana/data/bureautique/document\ Véronique/**
+ /mana/data/bureautique/Leisure/**
+ /mana/data/bureautique/Car/**
+ /mana/data/bureautique/Doc-Funix/**
# exclude everything else
- /**

Be careful, order matters: put the directories/files to exclude first, then those to include, and finish by excluding everything else. The rules are read from top to bottom and the first rule that matches is applied; the following ones are ignored. Note that ** matches files and subdirectories recursively, whereas a single * does not go beyond a directory separator.

This file will be used for both copying and synchronization. As a reminder the syntax is described here .

The files are saved on Google Drive under Backup/mana/data/bureautique, keeping their path. The archives are under Backup-archive/mana/data/bureautique, with the date suffix appended to each file name in the format .2021-12-04--07:55:25.
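To get an old version back, you first list the archived copies of a file, then copy the chosen one back under its original name with rclone copyto, which, unlike copy, lets you name the destination file. A minimal sketch; the function names are hypothetical, and file names containing glob characters such as * or [ would need escaping in the --include pattern:

```shell
#!/bin/bash
# Hypothetical helpers for the archive created by --backup-dir/--suffix.
ARCHIVE="google-secret:Backup-archive"

# List the archived versions of one file, with size and modification time.
# $1: directory relative to the archive root, $2: file name
list_versions() {
  rclone lsl "$ARCHIVE/$1" --include "$2.*"
}

# Copy one archived version back under a name of your choice.
# $1: archived path relative to the archive root, $2: local target file
restore_version() {
  rclone copyto "$ARCHIVE/$1" "$2"
}
```

For example: list_versions mana/data/bureautique/Finance "les comptes.ods", then restore_version "mana/data/bureautique/Finance/les comptes.ods.2021-12-04--16:34:59" ~/restoration/"les comptes.ods"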

It is of course also possible to work with relative paths; in that case, be careful to adapt both the inclusion/exclusion file and the source directory passed to the sync or copy command.

You may want to run the script first with --dry-run to check that it does what you want.

To run the synchronization every night at 4 am, assuming the script is called sync-rclone, is located in /home/olivier/bin and must run as the user olivier (whose rclone configuration it uses), type as root:

crontab -u olivier -e

and enter the line:

00 04 * * * /home/olivier/bin/sync-rclone

The output is:

no crontab for olivier - using an empty one
crontab: installing new crontab
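The five fields of 00 04 * * * are the minute, the hour, the day of the month, the month and the day of the week, so the script runs every day at 04:00; it must also be executable (chmod +x /home/olivier/bin/sync-rclone). If you prefer not to go through an editor, the same line can be added non-interactively, since crontab - installs a table read from standard input. A minimal sketch, to be run as olivier; the function name add_cron_job is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: add a line to the current user's crontab without
# opening an editor, and without duplicating it if it is already there.
add_cron_job() {
  local line="$1"
  {
    # Current table minus that line (crontab -l fails if there is none yet)
    crontab -l 2>/dev/null | grep -vxF -- "$line" || true
    printf '%s\n' "$line"
  } | crontab -
}
```

add_cron_job '00 04 * * * /home/olivier/bin/sync-rclone' can be run several times without creating duplicate entries.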

Once synchronized and archived, the log looks something like this:

2021/12/04 16:35:09 INFO  : mana/data/bureautique/Finance/les comptes.ods: Moved (server-side) to: mana/data/bureautique/Finance/les comptes.ods.2021-12-04--16:34:59
2021/12/04 16:35:35 INFO  : mana/data/bureautique/Finance/les comptes.ods: Copied (new)
2021/12/04 16:35:35 INFO  :
Transferred:        2.608 MiB / 2.608 MiB, 100%, 87.635 KiB/s, ETA 0s
Checks:              1817 / 1817, 100%
Renamed:                1
Transferred:            1 / 1, 100%
Elapsed time:        36.5s

and otherwise, when nothing has changed:

2021/12/05 07:09:25 INFO  : There was nothing to transfer
2021/12/05 07:09:25 INFO  :
Transferred:              0 B / 0 B, -, 0 B/s, ETA -
Checks:              1816 / 1816, 100%
Elapsed time:        19.5s

The problem is that the free version of Google Drive is limited to 15 GB, so you will probably need to clean up from time to time if you don't want to upgrade to a paid plan.
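To see how close you are to this limit, rclone about google-drive: displays the total, used and free space of the drive. The function below is a sketch built on its --json output; the name quota_percent is hypothetical, and the extraction with sed (rather than jq) assumes the fields of that output are named total and used, with values in bytes:

```shell
#!/bin/bash
# Hypothetical helper: print the percentage of the Google Drive quota in use.
# Values are extracted from "rclone about --json" with sed to avoid needing jq.
quota_percent() {
  local json used total
  json=$(rclone about google-drive: --json) || return 1
  used=$(printf '%s\n' "$json" | sed -n 's/.*"used": *\([0-9][0-9]*\).*/\1/p')
  total=$(printf '%s\n' "$json" | sed -n 's/.*"total": *\([0-9][0-9]*\).*/\1/p')
  if [ -z "$used" ] || [ -z "$total" ] || [ "$total" -eq 0 ]; then
    echo "quota information not available" >&2
    return 1
  fi
  echo $(( used * 100 / total ))   # integer percentage
}
```

A script could, for instance, trigger the cleanup described below when quota_percent returns more than 90.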

To delete, for example, all files older than 90 days in the Backup-archive directory of the drive, type

rclone delete google-secret:Backup-archive --min-age 90d -v

it will give a result like this

2022/12/25 16:27:31 INFO  : ultra/data/homepage/www.funix.org/en/linux/cinelerra_fichiers/translate_n_data/frame_back.gif.2022-12-25--15:48:18: Deleted
2022/12/25 16:27:31 INFO  : ultra/data/homepage/www.funix.org/en/linux/base-video_fichiers/translate_n_data/stock_frame_logo.gif.2022-12-25--15:48:18: Deleted
2022/12/25 16:27:31 INFO  : ultra/data/homepage/www.funix.org/en/linux/base-video_fichiers/translate_n_data/frame_remove.gif.2022-12-25--15:48:18: Deleted
2022/12/25 16:27:31 INFO  : ultra/data/homepage/www.funix.org/en/linux/base-video_fichiers/translate_n_data/frame_back.gif.2022-12-25--15:48:18: Deleted

The --min-age parameter expects a duration followed by a unit such as d (days), w (weeks), M (months) or y (years).

To systematically delete files older than a year with each backup, we can complete the script above by adding

/usr/local/bin/rclone delete $DESTINATION_ARCHIVE --min-age 12M -v --log-file $LOG_FILE
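rclone delete only removes files and leaves the directory tree of the archive in place, even when it becomes empty. A sketch that chains the deletion with rclone rmdirs, which removes empty directories, could replace the line above in the script; the function name prune_archive is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: remove archived versions older than a given age,
# then the directories this leaves empty (rclone delete only removes files).
# $1: archive remote path, $2: age such as 90d or 12M
prune_archive() {
  rclone delete "$1" --min-age "$2" -v || return 1
  # --leave-root keeps the archive directory itself even if it becomes empty
  rclone rmdirs "$1" --leave-root -v
}
```

In the synchronization script this would be called as prune_archive "$DESTINATION_ARCHIVE" 12M (add --log-file "$LOG_FILE" to both commands to keep everything in the same log).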
